In an era where fake news is the new news, everybody seems to be constantly seeking (their version of) “truth”, or “fact”. It is quite ironic that, while we live in a world where information is more accessible than ever, we are less “informed” than ever. Today, however, let’s take a break from all the chaos of real life and see if we can learn something about “truth” from the scientific world.

Three years ago, I spent my summer interning at a social psychology laboratory, where I was exposed, for the very first time, to statistics and the scientific method. That was also when my naive image of “science” shattered. It was not as noble as I thought but, like real life, messy and complicated. My colleagues showed me the dark corners of psychology research, where people are willing to do whatever it takes to get a perfect p-value and have their paper published (for example, the scandal involving Harvard psychology professor Marc Hauser). If you work in science, you are probably not surprised: social science, and psychology in particular, is plagued with misconduct and fraud. Reproducibility is a huge problem, as shown by the Reproducibility Project, in which 100 psychological findings were subjected to replication attempts. The results of this project were less than a ringing endorsement of research in the field: of the expected 89 replications, only 37 were obtained, and the average size of the effects fell dramatically. At the end of the internship, I wrote an article titled “Is psychology a science?”, whose conclusion stated: “Psychology remains a young field in search of a solid theoretical base, but that cannot justify the lack of rigor in its research methods. Science is about truth and not about being able to publish.”

“Science is about truth”.

Is it?

This seemingly evident statement came back to haunt me 3 years later, when I came across this quote by Neil deGrasse Tyson, a scientist whom I respect a lot:

The good thing about science is that it’s true whether or not you believe in it.

That was his answer to the question “What do you think about the people who don’t believe in evolution?”.

If we put it in context, where he had already commented on the scientific method, this phrase becomes less troubling. However, I still find it very extreme and even misleading for laypeople, just like my statement from 3 years ago.

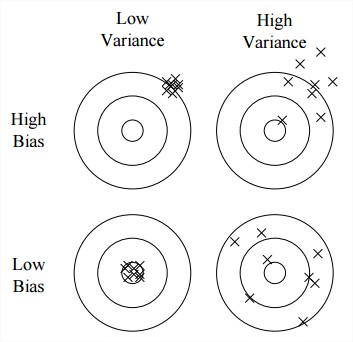

For me, science is about skepticism. It is more about not being wrong than about being true. A scientific theory never aspires to be “final”; it will always be subject to additional testing.

To better understand this statement, we need to go back to the root of the scientific method: statistics.

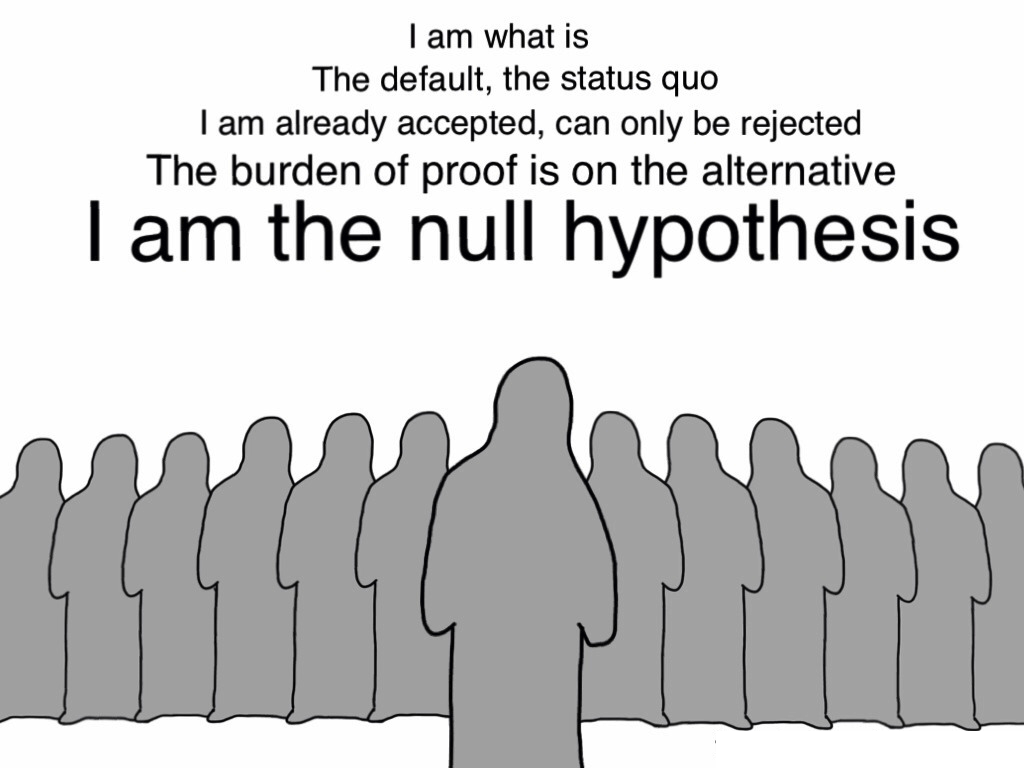

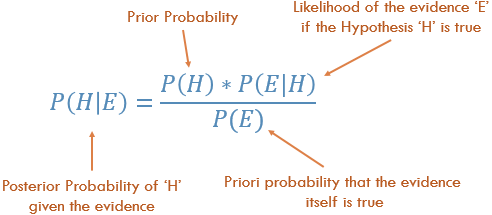

Let’s take an example from clinical trials: suppose that you want to test a new drug. You find a group of patients, give half of them the new drug and the rest a placebo. You measure the effect in each group and use a hypothesis test to check whether the difference between those 2 groups is significant.

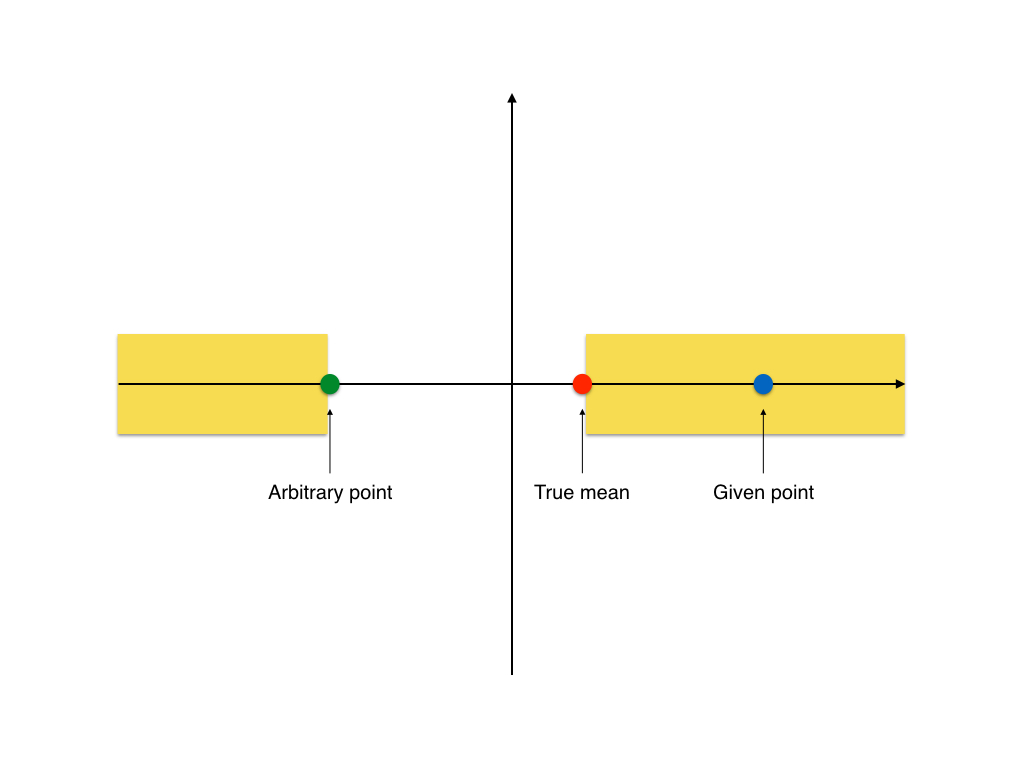

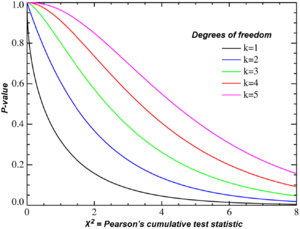

This is where the famously misunderstood p-value comes into play. It is the probability, under the assumption that there is no true effect or no difference, of collecting data that shows a difference equal to or greater than what we observed. For many people (including researchers), this definition is very counter-intuitive because it does not do what they expect: the p-value is not a measure of the effect size, and it does not tell you how right you are or how big the difference is. It just shows you a level of skepticism. A small p-value simply states that we are quite surprised by the difference between the 2 groups, given that we were not expecting any. It is only a probability, so if someone tries a lot of hypotheses on the same data, eventually they will get something significant by chance alone (this is known as the problem of multiple comparisons, and exploiting it is often called p-hacking).
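To make the definition more concrete, here is a minimal sketch of a permutation test, which is only one of several ways to obtain a p-value and not necessarily the test a real trial would use. The measurements and the function name `permutation_p_value` are made up for illustration. The idea: if the drug did nothing, the group labels would be arbitrary, so we shuffle them many times and count how often the shuffled difference is at least as large as the one we observed.

```python
import random


def permutation_p_value(treated, placebo, n_permutations=10_000, seed=0):
    """Estimate a two-sided p-value for the difference in group means.

    Under the "no effect" assumption the labels are interchangeable,
    so we reshuffle them and see how often chance alone produces a
    difference at least as large as the observed one.
    """
    rng = random.Random(seed)
    n_treated = len(treated)
    n_placebo = len(placebo)
    observed = abs(sum(treated) / n_treated - sum(placebo) / n_placebo)

    pooled = list(treated) + list(placebo)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        fake_treated = pooled[:n_treated]
        fake_placebo = pooled[n_treated:]
        diff = abs(sum(fake_treated) / n_treated - sum(fake_placebo) / n_placebo)
        # Small tolerance so rounding noise does not hide exact ties.
        if diff >= observed - 1e-12:
            at_least_as_extreme += 1
    return at_least_as_extreme / n_permutations


# Hypothetical measurements, invented for this example only.
treated = [5.1, 6.3, 5.8, 7.0, 6.1, 5.9, 6.6, 6.4]
placebo = [5.0, 5.4, 4.9, 5.7, 5.2, 5.6, 5.1, 5.3]

p = permutation_p_value(treated, placebo)
print(f"estimated p-value: {p:.4f}")
```

Note what the number does and does not say: it tells us how rarely shuffled labels reproduce such a gap, not how large or how useful the gap is.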

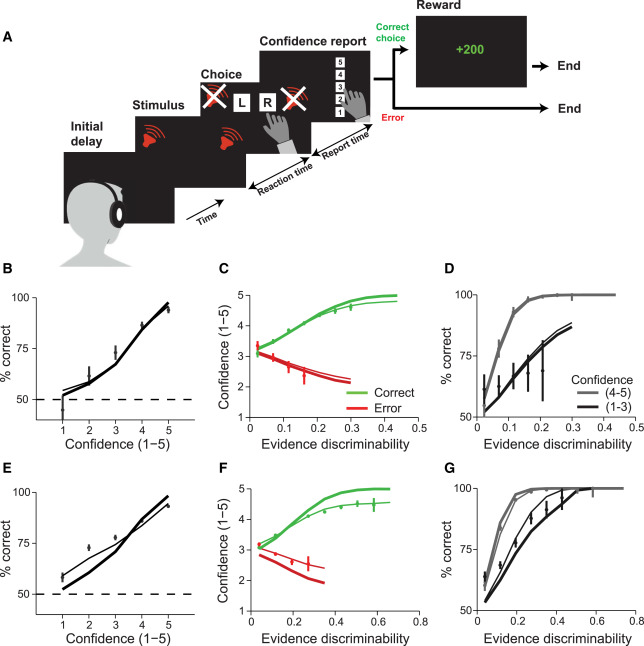

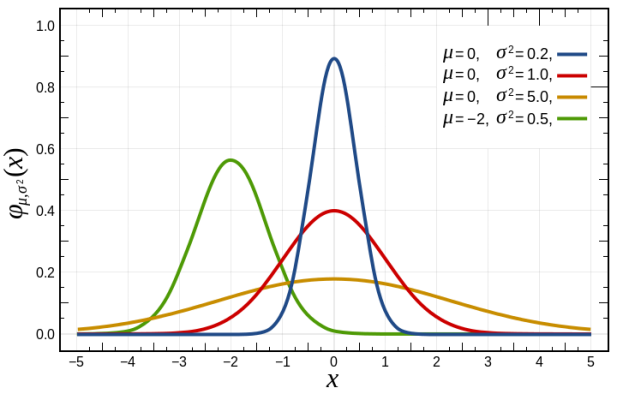

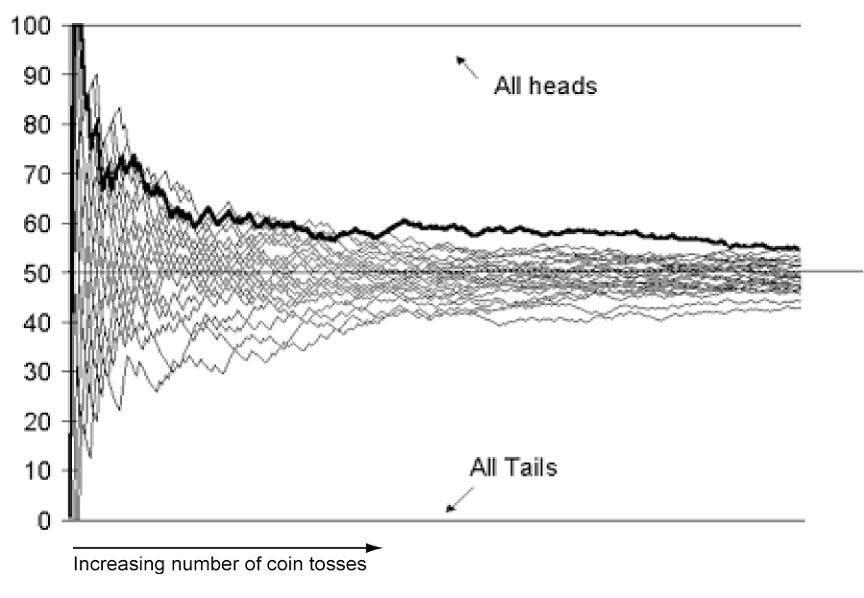

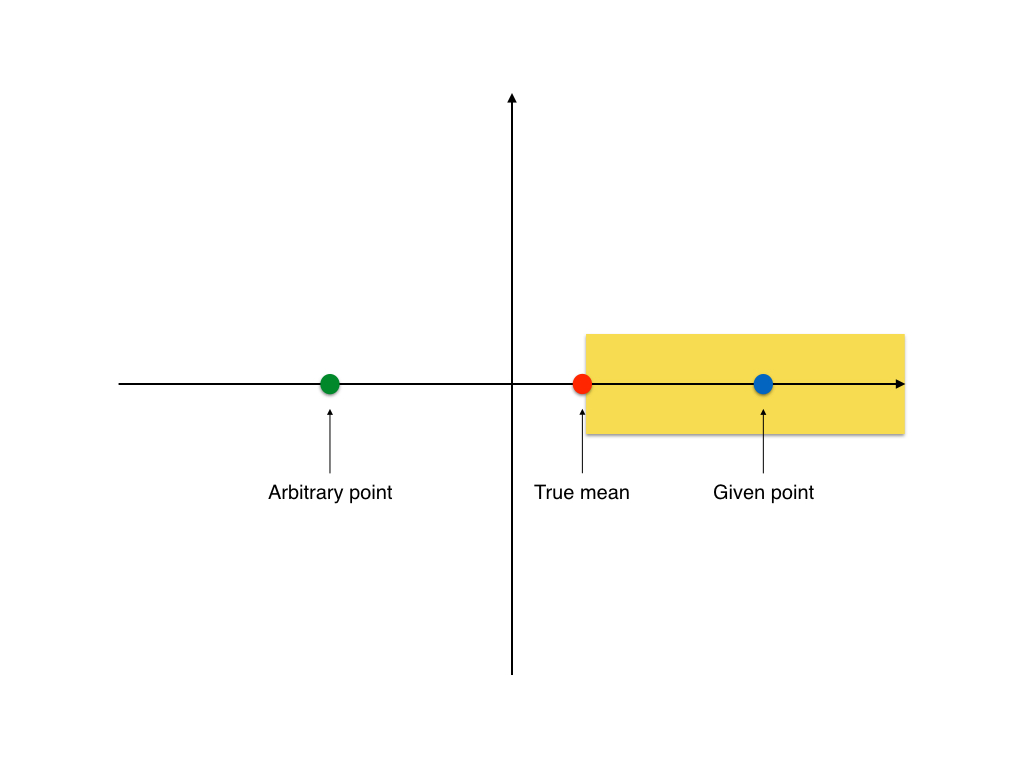

The whole world of research is driven by this metric. For many journals, a p-value of less than 5% is the first criterion for a paper to be reviewed. However, things are more complicated than that. As I mentioned earlier, the p-value is about statistical significance, not practical significance. If researchers collect enough data, they will eventually be able to lower the p-value and “discover” something, even if the effect is so tiny that it doesn’t make any impact in real life. This is where we need to discuss the effect size and, more importantly, the power of a hypothesis test. The former, as its name suggests, is the size of the difference that we are measuring. The latter is the probability that a hypothesis test will yield a statistically significant outcome when the effect really exists. It depends on the effect size and the sample size. If we have a large sample and want to measure a reasonable effect size, the power of the test will be high. Conversely, if we don’t have enough data but aim for a small effect size, the power will be low, which is quite logical: we can’t detect a subtle difference if we don’t have enough data. We can’t just toss a coin 10 times and say that, because there are 6 heads, the coin must be biased.
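The coin example can be checked by hand. Below is a small sketch using an exact two-sided binomial test against a fair coin. The helper names and the choice of a "truly biased" coin landing heads 60% of the time are assumptions made for illustration, not something measured.

```python
from math import comb


def binom_pmf(n, p):
    """Probability of each possible number of heads (0..n) in n tosses."""
    return [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]


def fair_coin_p_value(heads, n):
    """Exact two-sided p-value: chance that a fair coin lands at least
    as far from n/2 heads as the observed count."""
    pmf = binom_pmf(n, 0.5)
    distance = abs(heads - n / 2)
    return sum(prob for k, prob in enumerate(pmf) if abs(k - n / 2) >= distance)


def power(n, true_p, alpha=0.05):
    """Probability that the test rejects "fair coin" when the coin
    really lands heads with probability true_p."""
    null_pmf = binom_pmf(n, 0.5)
    true_pmf = binom_pmf(n, true_p)
    total = 0.0
    for k in range(n + 1):
        distance = abs(k - n / 2)
        p_value = sum(q for j, q in enumerate(null_pmf) if abs(j - n / 2) >= distance)
        if p_value <= alpha:
            total += true_pmf[k]
    return total


p_six_of_ten = fair_coin_p_value(6, 10)
print(f"p-value for 6 heads in 10 tosses: {p_six_of_ten:.3f}")

# Hypothetical biased coin (60% heads), tested with growing sample sizes.
for n in (10, 100, 1000):
    print(f"n = {n:4d}  power = {power(n, 0.6):.3f}")
```

The p-value for 6 heads out of 10 is about 0.754 (it equals 1 minus the probability of exactly 5 heads, 252/1024), so the result is nowhere near surprising. The loop shows the other side of the story: with the assumed 60% coin, 10 tosses almost never detect the bias, while 1000 tosses almost always do.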

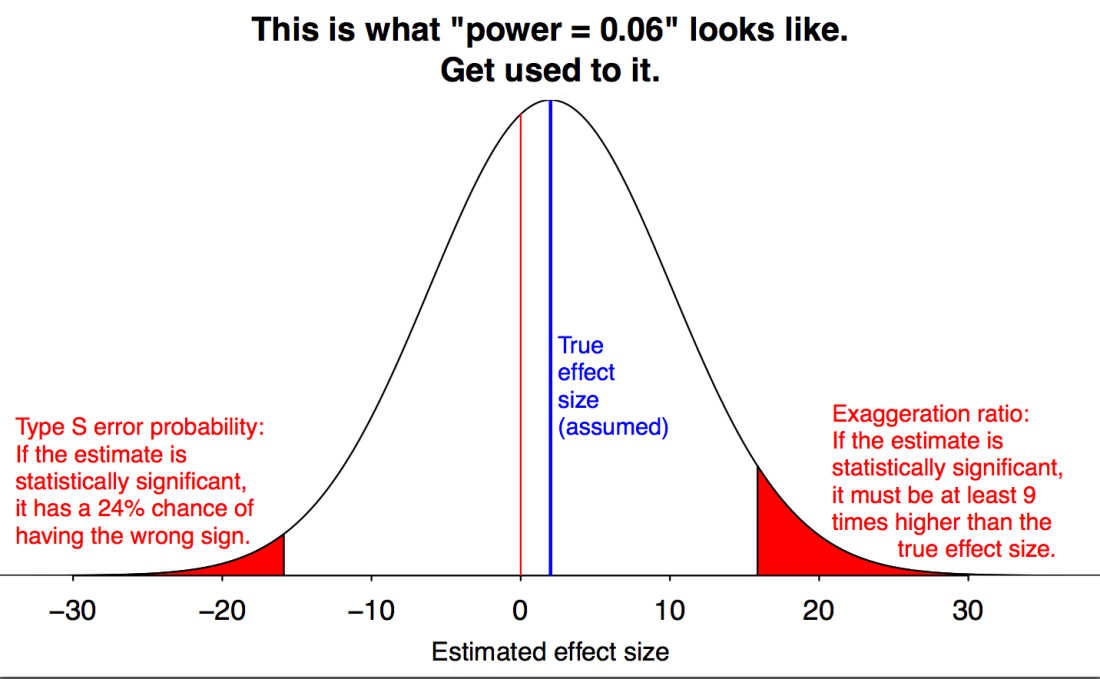

In fields where experiments are costly (social science, pharmaceuticals, …), small sample sizes lead to a big problem of truth inflation (or type M error). This happens when the hypothesis test has weak power and thus can’t reliably detect the true difference: the only studies that reach significance are the ones that, by luck, overestimate it.

In the curve above, we see that our data needs to have an effect size 9 times greater than the actual effect size to be statistically significant.
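Truth inflation is easy to reproduce in a simulation. The sketch below runs many small hypothetical studies with a tiny true effect, keeps only the ones that come out significant, and compares their average estimate with the truth. All the numbers (a true effect of 0.1, 20 patients per group, a known standard deviation of 1, a simple z-test) are assumptions chosen for illustration; they are not the parameters behind the curve above.

```python
import random
from math import sqrt


def simulate_truth_inflation(true_effect=0.1, n_per_group=20,
                             n_studies=5000, seed=1):
    """Return (power, exaggeration ratio) over many simulated studies.

    Assumes both groups have a known standard deviation of 1, so a
    simple z-test with the usual 1.96 cutoff (5% level) can be used.
    """
    rng = random.Random(seed)
    standard_error = sqrt(2 / n_per_group)
    significant = []
    for _ in range(n_studies):
        treated = [rng.gauss(true_effect, 1.0) for _ in range(n_per_group)]
        placebo = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        estimate = sum(treated) / n_per_group - sum(placebo) / n_per_group
        if abs(estimate) / standard_error > 1.96:
            significant.append(estimate)

    if not significant:
        return 0.0, float("nan")
    estimated_power = len(significant) / n_studies
    mean_significant = sum(abs(e) for e in significant) / len(significant)
    return estimated_power, mean_significant / true_effect


estimated_power, exaggeration = simulate_truth_inflation()
print(f"share of studies reaching significance: {estimated_power:.1%}")
print(f"average significant estimate / true effect: {exaggeration:.1f}x")
```

With these settings a study can only be significant if its estimate is more than about 6 times the true effect, so every "discovery" that survives the filter is, by construction, a large overestimate.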

The truth inflation problem turns out to be quite “convenient” for researchers: they get a significant result with a huge effect size! This is also what journals are looking for: “groundbreaking” results (results with large effect sizes in research fields with little prior research). And it is not rare.

All of this discussion is to show you that the scientific method is not definitive. It is based on statistics, and statistics is all about uncertainty, and sometimes it gets very tricky to do it the right way. But it needs to be done right. Some days it is hard, some days it is nearly impossible, but that’s the way science works.

To conclude, I think that science is not solely about truth, but about evaluating observations. This is where we can go back to the real world: in this era where we are drowning in data, we also need a rigorous approach to processing it: cross-check information from multiple sources, be as skeptical as possible to avoid selection bias, try not to be wrong and, most importantly, be honest with oneself, because at the end of the day, truth is a subjective term.